Until now we have

seen the windows memory addressing concepts.

http://sqlandmorewithkruti.blogspot.in/2016/02/memory-fundamentals-for-sql-server-awe.htmlSo how exactly are user applications like SQL server assigned to this space. To understand this we need to see how the application is broken in terms of processes and threads.

Process

Threads

Quantum

Amount of time a thread is allowed to execute on the processor. When the Quantum is reached or until a higher priority thread interrupts the thread is interrupted and another thread is allowed to run on the processor. This movement of thread out of processor is called Yielding.

Context Switching

Context Switching

When a new thread is selected to run on a processor if the existing thread is interrupted or if the quantum is met some piece of information is saved and loaded next time the thread is back on the CPU for execution. This information consists of data in the volatile registers and other program counters. This process of saving clearing and loading data between thread switching is called context switching.

Thread States

A thread can exists in any of the below states

0 Initialized

1 Ready— waiting on a processor

2 Running— currently using a processor

3 Standby— about to use a processor

4 Terminated— has been stopped

5 Wait— waiting on a peripheral operation or a resource

6 Transition— waiting for resource in order to execute

7 Unknown

Windows allows threads to be suspended and resumed based on Windows API's. If a thread suspends itself for a specified period of time its called a sleeping thread. Else a suspended thread cannot explicitly resume itself until intervened by another thread.

0 Initialized

1 Ready— waiting on a processor

2 Running— currently using a processor

3 Standby— about to use a processor

4 Terminated— has been stopped

5 Wait— waiting on a peripheral operation or a resource

6 Transition— waiting for resource in order to execute

7 Unknown

Windows allows threads to be suspended and resumed based on Windows API's. If a thread suspends itself for a specified period of time its called a sleeping thread. Else a suspended thread cannot explicitly resume itself until intervened by another thread.

Thread priorities

Windows allocates priorities to thread which govern their behaviour for yielding and context switching.Threads begin life inheriting the base priority of their process. This priority can be set when the process is first created.

Fibers

Fibers are a user-space construct. Its execution is not managed by kernel like threads but they follow. Cooperative scheduling Windows fibers have less overhead associated with them than threads do. One may also call them lightweight threads.Certain components of SQL Server don’t work, or don’t work well, when SQL Server runs in fiber mode. These components include SQLMail and SQLXML. Other components, such as heterogeneous and CLR queries, are not supported at all in fiber mode because they need certain thread-specific facilities provided by Windows.CPU Execution Models

Here one thread is executed as the name suggest per CPU processor.

Multi/Hyper-Threaded Execution Model

Here multiple threads are executed as the name suggest per CPU processor.

CPU Scheduling

CPU scheduling decisions take place under one of four conditions:

- When a process switches from the running state to the waiting state, such as for an I/O request or invocation of the wait( ) system call.

- When a process switches from the running state to the ready state, for example in response to an interrupt.

- When a process switches from the waiting state to the ready state, say at completion of I/O or a return from wait( ).

- When a process terminates.

For conditions 2 and 3 there is a choice - To either continue running the current process, or select a different one.

If scheduling takes place only under conditions 1 and 4, the system is said to be non-preemptive, or cooperative. Under these conditions, once a process starts running it keeps running, until it either voluntarily blocks or until it finishes. With non-preemptive scheduling, the code that’s being run is written to yield control at key points. At these yield points, the scheduler can determine whether a different task should be run.Otherwise the system is said to be preemptive. Operating system can interrupt the thread execution any time in preemptive scheduling.

CPU Architecture

Symmetric Multiprocessing

In a symmetric multiprocessing (SMP) architecture, all processors are connected to all of the memory through a shared system bus. Performance is limited by the bus bandwidth.

NonUniform Memory Access

A non-uniform memory access (NUMA) architecture refers to a system where there the total available memory is broken down into multiple nodes. A group of processors are also connected directly to each of these nodes. If a processor is accessing memory on the same node at which it is located its accessing "local" memory pool. If the processor is accessing memory from a different node its accessing remote or non-local memory. Thus it reduces in cost of using shared memory bus but there comes additional higher cost of accessing remote memory.

CPU Node

This is a logical unit within SQLOS where in which CPU's are grouped. On an SMP system, all CPUs would be grouped under a single CPU node, whereas on a NUMA-based system, there would be as many CPU nodes as the system supported.

Memory Node

This is a logical unit within SQLOS where in which memory access. There is a memory node for each set of CPUs to localize memory. On an SMP architecture, a memory node shares memory across all CPUs, whereas on a NUMA architecture, a memory node per NUMA node exists. Memory nodes share the memory allocated by Max Server Memory.

SQLOS options in SQL server

Connections, SPID's , Workers

When SQL server receives a new connection request an SPID is assigned. Next the scheduler gets assigned to the SPID . The connection is run on the least loaded scheduler on that node. Until SQL server 2008 the connection was scheduler bound. When subsequent requests are sent from the same SPID, if another scheduler has a load factor that is less than a certain percentage it gets assigned to the new worker for the same SPID.

In a SQL Server environment, one of the optimizations that SQL Server can choose is to use parallelization in a query plan. If the available processors are hyper-threaded, then they are not really separate processors; and because each thread in a parallel plan needs to update its status to a single set of data structures, on a system with Hyper-Threading enabled, a parallel plan can see a severe performance degradation.

SQLOS is a thin layer that sits between SQL Server and Windows. It is responsible for scheduling, I/O completion, memory management and resource management. A question may arise when windows already has its own memory management unit why is there a need for another user-mode layer. Reasons being

Tasks

Any work assigned for SQL server to be carried out or executed is called a task. They are different from SQL server SPID's. A SPID is merely a connection or channel over which requests can be sent. Not necessary an SPID might have any active task assigned. A batch can map to one or more tasks. Tasks are further broken down to Workers. Similar to a process being broken down to threads.

Worker

UMS worker encapsulates a thread or fiber & carries out the tasks within the SQL server. On receiving any new tasks a worker gets assigned to the tasks and is not released till the completion or termination of the task. If any of the worker is free from the pool it gets assigned to the new tasks. Else if the max worker threads value has not been reached, SQL Server will create and allocate a new worker. When the server is in thread mode and a worker has been idle for 15 minutes, SQL Server destroys it, provided doing so will not reduce the number of workers below a predefined threshold.

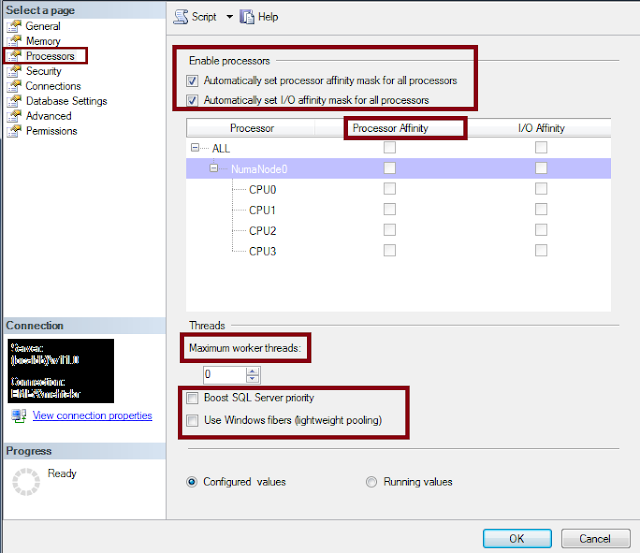

The Scheduler

When SQL server starts one scheduler is created for each processor on the system. So why do we have the option for processor affinity mask. Each scheduler is set to either ONLINE or OFFLINE based on this option. By default this is automatically configured and all the schedulers are supposed to be online. These schedulers picks up workers from the worker pool for execution. The workers are evenly distributed.

SQLOS

SQLOS is a thin layer that sits between SQL Server and Windows. It is responsible for scheduling, I/O completion, memory management and resource management. A question may arise when windows already has its own memory management unit why is there a need for another user-mode layer. Reasons being- Optimizing the way threads are chosen in terms of priority to be executed by schedulers

- Greater flexibility and control on underlying architecture.

- To avoid Context Switching

- Provision of extended diagnostic capabilities such as DAC and DMVs for better troubleshooting.

- Optimization of Windows performance when running SQL server.

Any work assigned for SQL server to be carried out or executed is called a task. They are different from SQL server SPID's. A SPID is merely a connection or channel over which requests can be sent. Not necessary an SPID might have any active task assigned. A batch can map to one or more tasks. Tasks are further broken down to Workers. Similar to a process being broken down to threads.

Worker

UMS worker encapsulates a thread or fiber & carries out the tasks within the SQL server. On receiving any new tasks a worker gets assigned to the tasks and is not released till the completion or termination of the task. If any of the worker is free from the pool it gets assigned to the new tasks. Else if the max worker threads value has not been reached, SQL Server will create and allocate a new worker. When the server is in thread mode and a worker has been idle for 15 minutes, SQL Server destroys it, provided doing so will not reduce the number of workers below a predefined threshold.

The Scheduler

When SQL server starts one scheduler is created for each processor on the system. So why do we have the option for processor affinity mask. Each scheduler is set to either ONLINE or OFFLINE based on this option. By default this is automatically configured and all the schedulers are supposed to be online. These schedulers picks up workers from the worker pool for execution. The workers are evenly distributed.

CPU Node

This is a logical unit within SQLOS where in which CPU's are grouped. On an SMP system, all CPUs would be grouped under a single CPU node, whereas on a NUMA-based system, there would be as many CPU nodes as the system supported.

Memory Node

This is a logical unit within SQLOS where in which memory access. There is a memory node for each set of CPUs to localize memory. On an SMP architecture, a memory node shares memory across all CPUs, whereas on a NUMA architecture, a memory node per NUMA node exists. Memory nodes share the memory allocated by Max Server Memory.

SQLOS options in SQL server

Connections, SPID's , Workers

When SQL server receives a new connection request an SPID is assigned. Next the scheduler gets assigned to the SPID . The connection is run on the least loaded scheduler on that node. Until SQL server 2008 the connection was scheduler bound. When subsequent requests are sent from the same SPID, if another scheduler has a load factor that is less than a certain percentage it gets assigned to the new worker for the same SPID.

So how exactly SQLOS puts up with the OS preemptive scheduling.

Each thread under SQLOS has an associated event object. If a thread is in wait state Windows will not consider it for scheduling. SQLOS puts workers(threads/fibers) to sleep until it wants it to be scheduled by making a call to WaitForSingleObject on their corresponding event object. It passes INFINITE as the parameter for the timeout value.

When it should be scheduled SQLOS the object associated with the worker will be signaled. This allows the worker thread to come out of its wait state and windows scheduler will now be able to place the thread based on scheduling to on of the processors. SQLOS relies on the fact that the workers, (threads or fibers) to yield voluntarily often enough so one process or thread doesn’t have exclusive control of the system. However it still adheres to a concept of a quantum. Instead of SQL Server tasks being forced to give up the CPU by the operating system, SQL Server tasks can request to be put on a wait queue periodically, and if they have exceeded the internally defined quantum, and they are not in the middle of an operation that cannot be stopped, they will voluntarily relinquish the CPU.

SQLOS as such is a vast topic to be covered under one post but the above gives a fair idea of its functionality and advantages. Below are a few links for the same.

http://blogs.msdn.com/b/sqlmeditation/archive/2012/12/13/tasks-worker-threads-scheduler-threads-explain-it-all-and-dmvs.aspx

https://technet.microsoft.com/en-us/library/ms189267(v=sql.105).aspx

Each thread under SQLOS has an associated event object. If a thread is in wait state Windows will not consider it for scheduling. SQLOS puts workers(threads/fibers) to sleep until it wants it to be scheduled by making a call to WaitForSingleObject on their corresponding event object. It passes INFINITE as the parameter for the timeout value.

When it should be scheduled SQLOS the object associated with the worker will be signaled. This allows the worker thread to come out of its wait state and windows scheduler will now be able to place the thread based on scheduling to on of the processors. SQLOS relies on the fact that the workers, (threads or fibers) to yield voluntarily often enough so one process or thread doesn’t have exclusive control of the system. However it still adheres to a concept of a quantum. Instead of SQL Server tasks being forced to give up the CPU by the operating system, SQL Server tasks can request to be put on a wait queue periodically, and if they have exceeded the internally defined quantum, and they are not in the middle of an operation that cannot be stopped, they will voluntarily relinquish the CPU.

SQLOS as such is a vast topic to be covered under one post but the above gives a fair idea of its functionality and advantages. Below are a few links for the same.

http://blogs.msdn.com/b/sqlmeditation/archive/2012/12/13/tasks-worker-threads-scheduler-threads-explain-it-all-and-dmvs.aspx

https://technet.microsoft.com/en-us/library/ms189267(v=sql.105).aspx

https://technet.microsoft.com/en-us/library/ms176043(v=sql.105).aspx

https://technet.microsoft.com/en-us/library/ee210547(v=sql.105).aspx

https://msdn.microsoft.com/en-in/library/ms178074.aspx

https://mssqlwiki.com/2013/01/10/sql-server-operating-system-sos-series-1/

https://technet.microsoft.com/en-us/library/ee210547(v=sql.105).aspx

https://msdn.microsoft.com/en-in/library/ms178074.aspx

https://mssqlwiki.com/2013/01/10/sql-server-operating-system-sos-series-1/

https://blogs.msdn.microsoft.com/sqljourney/2012/12/16/an-in-depth-look-at-sql-server-memorypart-1/

In the next blogs we shall see the segregation of memory in terms of SQL server

In the next blogs we shall see the segregation of memory in terms of SQL server

ReplyDeleteit’s ok to show some appreciation and say ‘great post’

.NET developer

Brilliant Post! Finally found a blog with important SQL Server internals in one page! :)

ReplyDeleteThanks Bruce

Delete